The WordPress Backend Performance Benchmarks compared three prominent hosting providers (Cloudways Autonomous, Kinsta, and WP Engine) to assess how well each platform handles an extensive e-commerce workload running WooCommerce.

The testing environment, server configuration, and test cases were designed to simulate real-world scenarios, with the focus on two e-commerce metrics: “add to carts per second” and “checkouts per second.”

K6, an open-source load testing framework, was used for the benchmarks. The test scenarios covered home page and shop page interactions, the customer checkout journey, and existing customer login and order history views. Tests ran at low, medium, and high concurrency levels to evaluate performance under varying loads.

Methodology

Environment Setup

We used the latest WordPress core software, along with any additional plugins or configurations that the hosting service provided out of the box (i.e. without explicit configuration on our part). Some providers also came with a default premium theme; however, we opted for the widely adopted Storefront theme by WooCommerce, as it is well written from a performance point of view and showcases most of the features we wanted to test.

Of course, we didn’t want to test an empty site, so we wrote a few CLI commands (packaged as a plugin) to populate an empty WordPress site with registered users, posts, pages, media uploads, WooCommerce products, and orders assigned to registered users. The baseline amount of generated content for each tested environment was:

- 1000 users with a customer role (+ 1 admin user)

- 500 posts (published by the admin user)

- 100 pages (published by admin)

- 300 media uploads, randomly selected from 10 sample JPEG images, 30 to 40 KB in size

- 100 products

- 1000 orders, with products assigned round-robin from the first ten products
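The generation plugin itself is not published, so the following is only a minimal Python sketch of how the order step could assign products round-robin from the first ten. It assumes contiguous, hypothetical product and customer IDs, and it also assigns customers round-robin, which is our assumption rather than something the benchmark states:

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_no: int
    customer_id: int
    product_id: int


def generate_orders(num_orders, customer_ids, product_ids, subset_size=10):
    """Assign products round-robin from the first `subset_size` product IDs.

    Customers are also assigned round-robin here; the benchmark does not say
    how orders were spread across customers, so that part is an assumption.
    """
    subset = product_ids[:subset_size]
    return [
        Order(
            order_no=i + 1,
            customer_id=customer_ids[i % len(customer_ids)],
            product_id=subset[i % len(subset)],
        )
        for i in range(num_orders)
    ]


# Hypothetical IDs: 1000 customers (ID 1 reserved for the admin) and 100 products.
orders = generate_orders(1000, list(range(2, 1002)), list(range(1, 101)))
print(orders[0].product_id, orders[9].product_id, orders[10].product_id)  # 1 10 1
```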

In addition, our generation script updated several WooCommerce and Storefront settings so that customers (or, in our case, test processes) were able to complete a full checkout. We used Woo’s “cash on delivery” payment method to eliminate third-party payment gateway providers from the benchmark.

The plugin also exposed the available usernames on the WordPress installation via a REST API endpoint, which the setup phase of our customer login scenario used to determine which users were available for the test.
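The endpoint’s route and response shape are not given, so this is only a Python sketch of the setup-phase logic. It assumes the endpoint returns a JSON array of username strings (the sample usernames are made up) and that each virtual user is pinned to one account:

```python
import json


def usernames_from_response(body: str) -> list[str]:
    """Parse the endpoint's response, assumed here to be a JSON array of usernames."""
    data = json.loads(body)
    if not isinstance(data, list) or not all(isinstance(u, str) for u in data):
        raise ValueError("expected a JSON array of usernames")
    return data


def username_for_vu(vu_id: int, usernames: list[str]) -> str:
    """Map a 1-based virtual-user number (like K6's __VU) to an account.

    With more virtual users than accounts, accounts are reused round-robin.
    """
    if not usernames:
        raise ValueError("no usernames available")
    return usernames[(vu_id - 1) % len(usernames)]


names = usernames_from_response('["customer1", "customer2", "customer3"]')
print(username_for_vu(1, names), username_for_vu(4, names))  # customer1 customer1
```

Pinning accounts per virtual user keeps sessions from colliding; reusing accounts round-robin is one possible fallback when virtual users outnumber accounts.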

Server Configuration

For all three hosting environments, we had no visibility into or control over the server configuration. We opted for the recommended starter plan for a WooCommerce store and arranged our load testing schedule with each provider’s support staff, to make sure we weren’t triggering any security measures or rate limiting.

We also had no control over the caching strategies employed by each provider, although we did our best to bypass any full-page caching for a fair comparison.

Testing Tools

K6 was used to run all the benchmarks. It is an open-source load testing framework written in Go that runs JavaScript-based test scenarios and supports a wide range of configuration options and features, including per-user cookies, custom headers, and ramp-up/ramp-down periods.

Our source testing environment ran on the DigitalOcean cloud, in the lon1 and fra1 locations, which showed the best latency to the target hosting providers’ origin servers. We determined the origin servers’ locations from their public IPv4 addresses. The testing server was an AMD-based instance with 2 vCPUs, 4 GB RAM, and an 80 GB SSD, plus an additional 4 GB of swap space.
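The article does not describe how latency was measured. As a minimal sketch, assuming round-trip-time samples were collected from each candidate DigitalOcean region to each provider’s origin, the selection rule could look like this in Python (using the median as the deciding statistic is our assumption):

```python
from statistics import median


def best_region(rtt_ms_by_region: dict[str, list[float]]) -> str:
    """Return the region with the lowest median round-trip time to one origin.

    The median keeps a single slow sample from skewing the choice.
    """
    return min(rtt_ms_by_region, key=lambda region: median(rtt_ms_by_region[region]))


def regions_per_target(rtts: dict[str, dict[str, list[float]]]) -> dict[str, str]:
    """Pick a source region independently for each target provider's origin."""
    return {target: best_region(samples) for target, samples in rtts.items()}
```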

Test Cases

We wrote three K6 tests that together cover most of the intensive workload a store needs to survive during a big e-commerce sale event:

- A home page load test combined with a search on the /shop/ page

- A full customer checkout journey from visiting the home page to the final “order received” page

- An existing customer login and order history view test
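The test scripts themselves are not published. As a rough Python sketch, the checkout journey can be modeled as an ordered list of requests. The paths below assume WooCommerce’s default permalinks and the classic (non-block) checkout, and are not taken from the benchmark’s scripts:

```python
from typing import NamedTuple


class Step(NamedTuple):
    name: str
    method: str
    path: str


def checkout_journey(product_id: int) -> list[Step]:
    """Hypothetical request sequence for one customer checkout journey."""
    return [
        Step("home", "GET", "/"),
        Step("shop", "GET", "/shop/"),
        Step("add_to_cart", "GET", f"/?add-to-cart={product_id}"),
        Step("cart", "GET", "/cart/"),
        Step("checkout_page", "GET", "/checkout/"),
        # Classic checkout submits the order form via the wc-ajax endpoint.
        Step("place_order", "POST", "/?wc-ajax=checkout"),
        # The real URL also carries the order ID and key from the previous response.
        Step("order_received", "GET", "/checkout/order-received/"),
    ]


for step in checkout_journey(42):
    print(step.method, step.path)
```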

All tests were designed to bypass any full-page caching at the CDN, load balancer, or PHP/origin level. Transient and/or object caching was still available, however, so we primed the targets with similar but shorter tests to make sure cold starts did not affect our results.
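The article does not say how caching was bypassed. One common approach, shown here only as an assumption, is to make every URL unique with a random query parameter (the name `nocache` is hypothetical), so a cache keyed on the full URL has no stored copy to serve:

```python
import uuid
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def cache_busted(url: str, param: str = "nocache") -> str:
    """Return `url` with a unique query parameter appended.

    Existing query parameters (e.g. a /shop/ search term) are preserved.
    """
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append((param, uuid.uuid4().hex))  # random value, unique per request
    return urlunsplit(parts._replace(query=urlencode(query)))


print(cache_busted("https://example.com/shop/?s=shirt"))
```

Some caches ignore or strip unknown query parameters, so whether this actually bypasses a given provider’s cache is best confirmed from the response headers.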

The target test duration was 20 minutes in all cases, with an additional 5-minute ramp-up period and a 30-second cooldown period, for a total run time of 25 minutes and 30 seconds. The concurrency level ranged from 5 to 500 (and up to 1000 for Cloudways), depending on the test and the target provider. We stopped increasing concurrency once we hit a high failure/error rate or timeouts. The request timeout was set to 60 seconds.
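Assuming the 20 minutes is the steady-state period and the ramp-up and cooldown come on top of it (which matches the 25 minutes and 30 seconds reported for the low-concurrency Kinsta run), the schedule can be expressed as a stage list in the shape of K6’s `options.stages`. This Python sketch builds it and checks the total:

```python
UNITS = {"s": 1, "m": 60}
REQUEST_TIMEOUT = "60s"  # per-request timeout (the `timeout` request param in K6)


def parse_duration(text: str) -> int:
    """Convert K6-style durations like '5m' or '30s' to seconds (s and m only)."""
    return int(text[:-1]) * UNITS[text[-1]]


def stages_for(vus: int) -> list[dict]:
    """Ramp up to `vus`, hold for the 20-minute test, then cool down to zero."""
    return [
        {"duration": "5m", "target": vus},   # ramp-up
        {"duration": "20m", "target": vus},  # steady state
        {"duration": "30s", "target": 0},    # cooldown
    ]


def total_seconds(stages: list[dict]) -> int:
    return sum(parse_duration(stage["duration"]) for stage in stages)


print(total_seconds(stages_for(50)))  # 1530, i.e. 25 minutes 30 seconds
```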

Our initial plan was to cover all three test cases, but logging in at scale ran into rate limits and other security measures that skewed the results, so we focused on the checkout journey instead.

Metrics

While we measured the usual metrics (requests per second, throughput, response times, etc.), we wanted this benchmark to focus on what is more relatable in the e-commerce world, so we selected “add to carts per second” and “checkouts per second” as our key performance metrics, both taken from our second test scenario, the checkout journey.

These metrics were captured in the test scripts using K6 counters, incremented on each successful add-to-cart or checkout.
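K6’s own API for this is `Counter` from the `k6/metrics` module, whose `add()` increments the metric and whose end-of-test summary reports both the total and a per-second rate. The Python class below is only a stand-in to show that arithmetic (count divided by elapsed time); the injectable clock is our addition so it can be tested deterministically:

```python
import time


class RateCounter:
    """Python stand-in for a K6 Counter: a running total plus a per-second rate."""

    def __init__(self, name, clock=time.monotonic):
        self.name = name
        self.count = 0
        self._clock = clock
        self._start = clock()

    def add(self, value=1):
        self.count += value

    def rate(self):
        """Events per second since the counter was created."""
        elapsed = self._clock() - self._start
        return self.count / elapsed if elapsed > 0 else 0.0


add_to_carts = RateCounter("add_to_carts")
checkouts = RateCounter("checkouts")

# In the test loop: increment only after a step is confirmed successful
# (what counts as "success" is up to the script, e.g. reaching "order received").
add_to_carts.add()
checkouts.add()
```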

Results

The results focus on our checkout journey test and its key performance metrics: add-to-cart events per second and successful checkouts per second. We ran the test at concurrency levels from 5 all the way up to 500, or until we saw a high error/failure rate.
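The stopping rule can be sketched in Python as below. The error-rate cut-off is a hypothetical parameter, since the benchmark only says testing stopped at a “high” failure rate, and `run_test` stands in for a full K6 run at the given concurrency:

```python
from typing import Callable


def step_concurrency(
    levels: list[int],
    run_test: Callable[[int], float],
    max_error_rate: float,
) -> dict[int, float]:
    """Run `run_test` at increasing concurrency, stopping once errors get too high.

    `run_test(vus)` returns the error rate (0.0 to 1.0) observed at that level.
    """
    results = {}
    for vus in levels:
        error_rate = run_test(vus)
        results[vus] = error_rate
        if error_rate > max_error_rate:
            break  # higher levels would only fail faster
    return results
```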

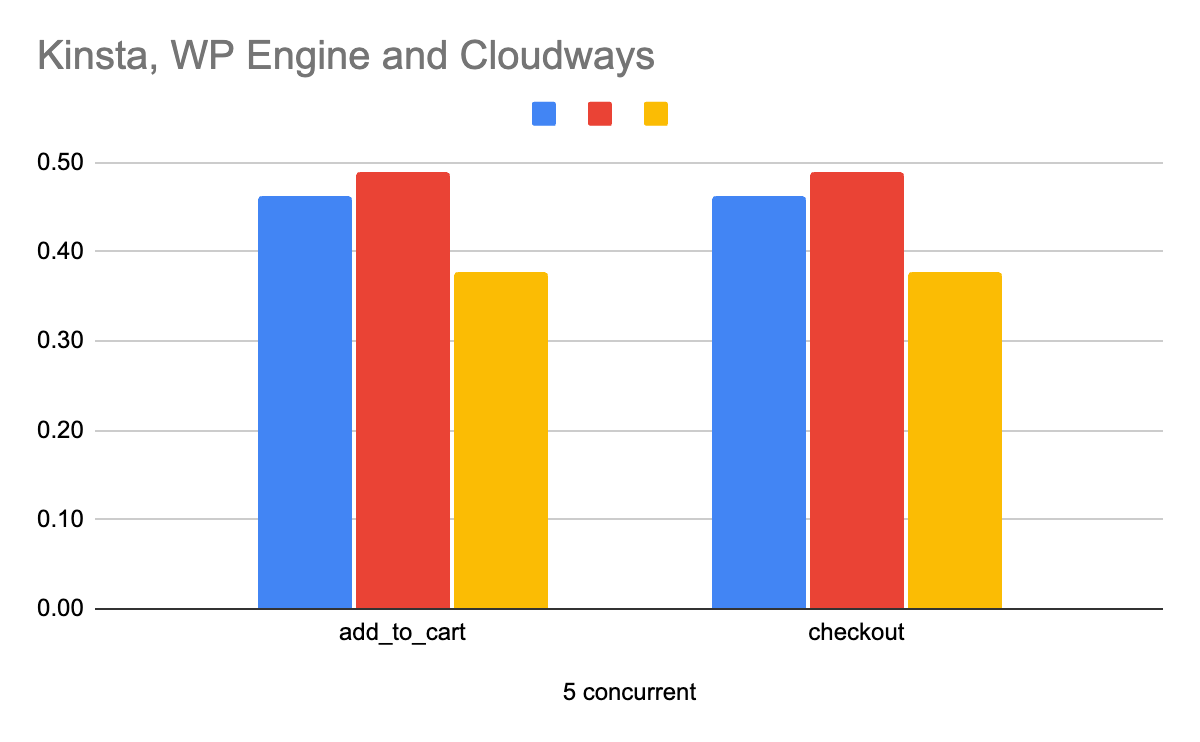

Low Concurrency

Kinsta: At low concurrency (5 users), Kinsta sustained a stable 0.46 add-to-carts and checkouts per second, with an average response time of 0.382 seconds. The test made 4886 requests in total, an average of 3.24 requests per second over the full test duration (25 minutes and 30 seconds).

WP Engine: Slightly better add-to-carts and checkouts per second (0.49), with a slightly shorter average response time (0.307 seconds), 5173 total requests, and an average of 3.38 RPS.

Cloudways: Slightly worse than both WP Engine and Kinsta in this low-concurrency test, at 0.38 add-to-carts and checkouts per second, a 0.698-second average response time, 3990 requests, and an average of 2.61 RPS.
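The reported RPS figures are consistent with dividing total requests by the full 25-minute-30-second run, ramp-up and cooldown included, so they are likely somewhat lower than the steady-state rate. A quick Python check against the WP Engine and Cloudways figures above:

```python
# 5-minute ramp-up + 20-minute steady state + 30-second cooldown.
TEST_DURATION_S = 5 * 60 + 20 * 60 + 30  # 1530 seconds


def average_rps(total_requests: int, duration_s: int = TEST_DURATION_S) -> float:
    """Average requests per second over the whole run, ramp periods included."""
    return total_requests / duration_s


print(round(average_rps(5173), 2))  # WP Engine, 5 users: 3.38
print(round(average_rps(3990), 2))  # Cloudways, 5 users: 2.61
```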

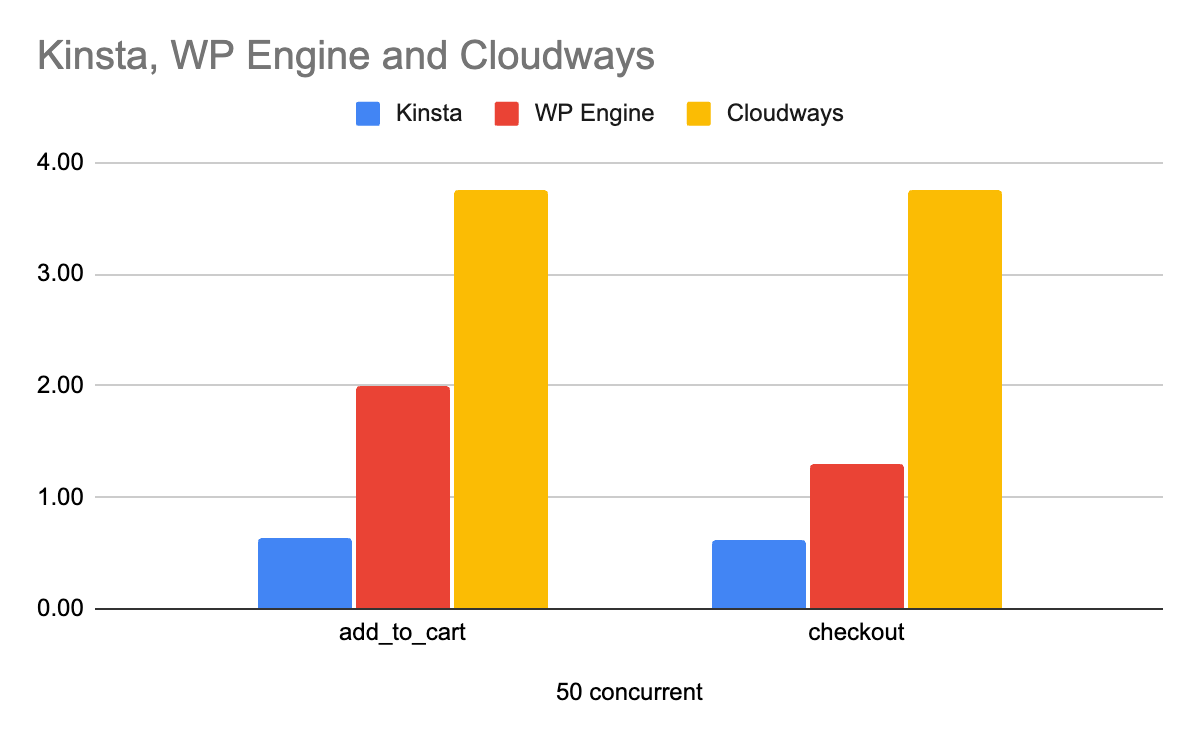

Medium Concurrency

At 50 concurrent users, Kinsta handled 0.63 add-to-carts and 0.62 checkouts per second, but at a significant cost: the average response time rose to 9.19 seconds. The test resulted in 6728 requests at an average of 4.40 RPS.

WP Engine did significantly better at this concurrency level, with 2.0 add-to-carts and 1.29 checkouts per second. The average response time was only 1.02 seconds, and the test handled 25216 requests at 16.48 RPS. However, we also saw over 9000 failed requests, bringing the failure rate to 26.59%. This was a sign that we were running into limits on the number of PHP workers and the size of the worker backlog with WP Engine, which our next test further confirmed.

Cloudways did significantly better than both WP Engine and Kinsta in this 50-concurrency test, with 3.76 add-to-carts and checkouts per second, an impressive 0.702-second average response time, and 39781 total requests, averaging 26 requests per second with no failures.

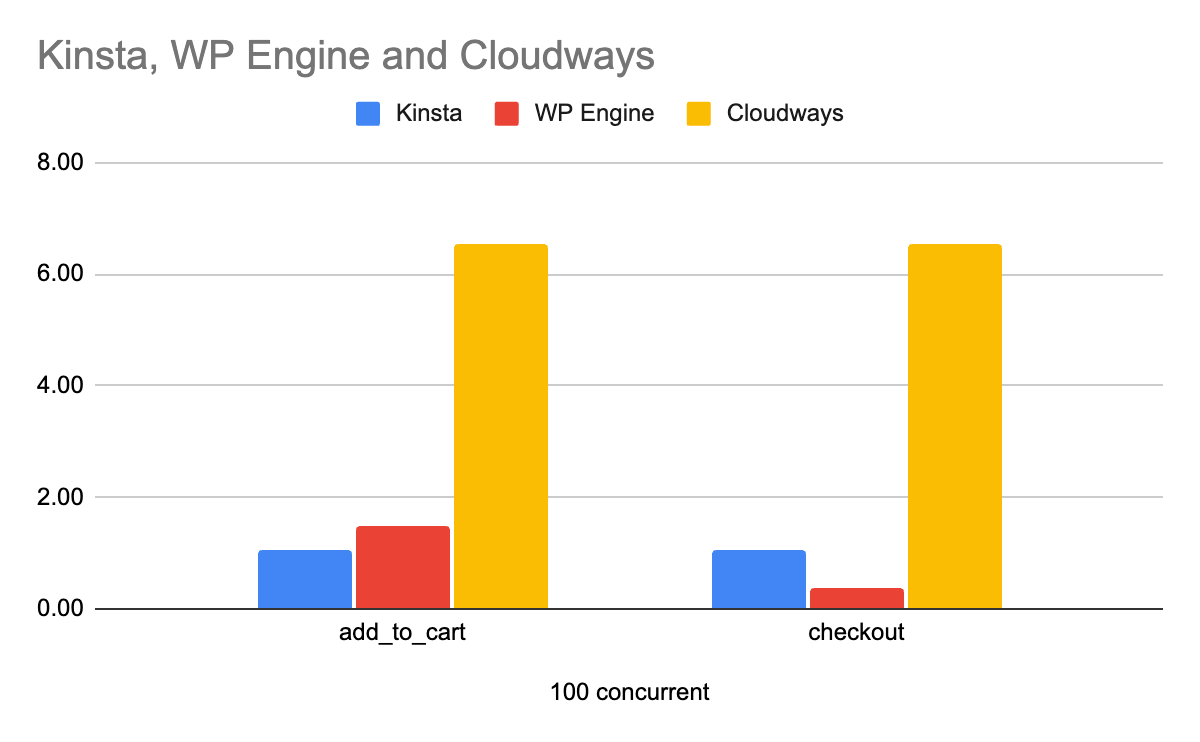

High Concurrency

With 100 concurrent users, Kinsta handled 1.07 add-to-carts and 1.04 checkouts per second, an increase over the previous concurrency level, but its response time continued to worsen, averaging 11.13 seconds. The test handled 11304 requests in total, or 7.39 RPS over the duration of the test.

WP Engine’s 100-concurrency results were worse than its 50-concurrency results, with only 1.49 add-to-carts and 0.39 checkouts per second, although the average response time dropped to 0.387 seconds. The total number of requests increased slightly to 29907, an average of 19.55 RPS. The failure rate exceeded 70% at this concurrency, further confirming the low PHP worker count and backlog limit: requests fail early, in exchange for significantly better response times for the requests that succeed.

Cloudways performed well in the 100-concurrency test, handling 6.54 add-to-carts and checkouts per second with an average response time of 0.956 seconds. The test completed 69207 successful requests, or 45.23 requests per second on average.
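As a rough cross-check of the backlog interpretation, Little’s law (average requests in the system = throughput × average response time) can be applied to the 100-concurrency averages. This is a Python sketch; because the RPS averages include the ramp periods, the results are approximate, and comparing them with the number of virtual users assumes each virtual user waits for a response before sending its next request:

```python
def requests_in_flight(throughput_rps: float, avg_response_s: float) -> float:
    """Little's law: L = lambda * W (mean requests in system = rate * mean time)."""
    return throughput_rps * avg_response_s


# 100-concurrency averages reported above.
kinsta = requests_in_flight(7.39, 11.13)      # ~82.3 of 100 virtual users
cloudways = requests_in_flight(45.23, 0.956)  # ~43.2 of 100 virtual users
print(f"Kinsta: {kinsta:.1f}, Cloudways: {cloudways:.1f}")
```

On this reading, Kinsta’s virtual users spent roughly four fifths of their time waiting on outstanding requests, which is consistent with requests queuing rather than being rejected.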

200+ Concurrency

We had also planned to run higher-concurrency tests across the three providers, but with limited success.

Kinsta’s add-to-cart and checkout rates did not increase in the 200 and 500 concurrency tests. Average response times, however, skyrocketed to over 20 seconds in the 200-concurrency test and reached our 60-second timeout in the 500-concurrency test. This suggests that Kinsta has a very large PHP worker backlog and will keep requests queued for as long as it takes to serve them.

WP Engine’s approach seems quite different: it appears to prioritize serving requests fast and failing early when there aren’t enough resources to handle incoming requests, hence the shorter PHP worker backlog. In the 200-concurrency test we immediately hit 429 errors, suggesting we had reached their per-IP rate limit. Our plan B was to split the test across multiple IPs, but given the 70% error rate at 100 concurrent users, we saw little point in testing further.
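The contrast between the two approaches can be illustrated with a deliberately simplified Python model of a PHP worker pool with a bounded backlog. The worker counts, backlog sizes, and service time are hypothetical; neither provider publishes its configuration, and real servers do not process requests in neat rounds:

```python
import math
from dataclasses import dataclass


@dataclass
class Outcome:
    running: int        # picked up by a worker immediately
    queued: int         # waiting in the backlog
    rejected: int       # turned away at once (e.g. as a 5xx error)
    worst_wait_s: float


def admit(concurrent: int, workers: int, backlog: int, service_s: float) -> Outcome:
    """Snapshot of `concurrent` requests arriving at once at a worker pool."""
    if workers < 1:
        raise ValueError("need at least one worker")
    running = min(concurrent, workers)
    queued = min(max(concurrent - workers, 0), backlog)
    rejected = max(concurrent - workers - backlog, 0)
    # Queued requests are served `workers` at a time, so the last one waits
    # ceil(queued / workers) full service periods before it starts.
    worst_wait_s = math.ceil(queued / workers) * service_s
    return Outcome(running, queued, rejected, worst_wait_s)


deep = admit(100, workers=10, backlog=1000, service_s=1.0)   # queue everything
shallow = admit(100, workers=10, backlog=20, service_s=1.0)  # fail fast
print(deep)
print(shallow)
```

With these made-up numbers, the deep backlog queues 90 requests and the last one waits about 9 seconds, while the shallow backlog rejects 70 requests outright and no queued request waits more than about 2 seconds.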

Cloudways did fairly well in the 200-concurrency test, delivering 6.68 add-to-carts and 6.84 checkouts per second while maintaining a still reasonable 2.72-second average response time and an insignificant error rate. We also completed our 500 and 1000 concurrency tests with Cloudways (from multiple test locations), although we hit a significant number of errors (including timeouts) at those levels.

Error Analysis

While it would have been interesting to see what was happening at the server level, this was a black-box test, so we could only judge based on response codes, headers, and timings.

Most of the errors we saw with Kinsta and Cloudways were timeouts rather than dropped connections. Since both are behind a CDN, some requests also failed with 503 errors when the origin server appeared to be offline to the CDN. WP Engine’s low backlog limit resulted in more 502/504 errors, and ultimately 429 errors once we hit their rate limits.
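As a minimal Python sketch of this black-box classification, responses can be bucketed by status code alone. Status 0 stands for “no HTTP response”, which is how K6 reports timeouts and other network errors; the bucket names are our own labels:

```python
from collections import Counter


def classify(status: int) -> str:
    """Bucket a response by status code, following the error analysis above."""
    if status == 0:
        return "timeout/network"       # no HTTP response at all
    if status == 429:
        return "rate limited"
    if status in (502, 504):
        return "gateway error"
    if status == 503:
        return "origin unavailable"    # e.g. CDN could not reach the origin
    if 200 <= status < 400:
        return "ok"
    return "other"


def error_breakdown(statuses) -> Counter:
    """Count errors per bucket, leaving successful responses out."""
    counts = Counter(classify(s) for s in statuses)
    counts.pop("ok", None)
    return counts
```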

None of the three platforms failed beyond recovery: the sites were available and fast almost immediately after the tests completed or were interrupted.

Conclusion

This benchmark highlighted the strengths and limitations of Cloudways, Kinsta, and WP Engine in managing e-commerce workloads. Cloudways Autonomous emerged as a robust performer across various concurrency levels, showcasing reliability and speed. WP Engine demonstrated efficiency at medium loads but faced scalability issues at high concurrency. Kinsta, while stable at low concurrency, encountered challenges in maintaining performance as loads increased.

Error analysis indicated that all platforms recovered well after testing, emphasizing their overall resilience. The study provides valuable insights for businesses seeking hosting solutions for WordPress e-commerce sites, enabling informed decisions based on performance metrics relevant to real-world scenarios.

You can find the raw data for our checkout test in this spreadsheet. If you have any questions or feedback about this benchmark, please don’t hesitate to contact us at hi@koddr.io.